Iterative buffer planning

The goal of planning is to keep our promises. It's not about keeping our people busy. Hold that thought.

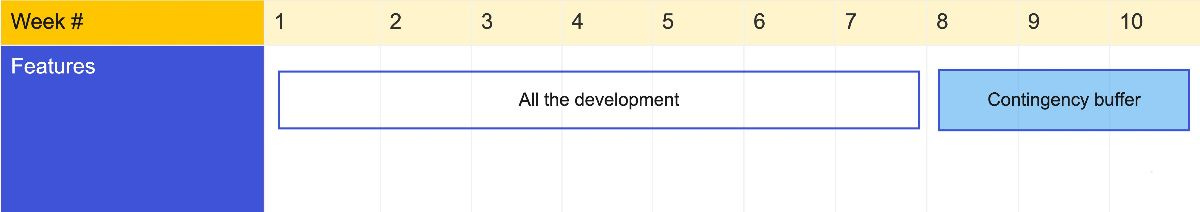

We know that estimates rot, projections are flawed, and events happen. That's why nobody in their right mind plans at full capacity. We always leave some slack in our plans. Traditional project management has two ways of building in a buffer: round up the estimates, or add a block of time at the end of the project.

The main issue with this kind of planning is that it creates a negative domino effect: being behind on one feature means we have to catch up at the expense of another. What we can't see, however, is how far behind we are. Even after completing 80% of the tasks, we still rely on flaky estimates to guess how much longer the rest will take. The only way to find out whether our buffer is large enough is to reach it and see whether we burn through it.

These budget overruns are one of the reasons agile software development became so popular. We don't want to discover that we're over budget after 80% of the time has passed. We want constant feedback so we can adapt to the situation in real time.

But planning in a feedback-driven world has similar challenges. Since there is no delivery date, we can't put a buffer at the end. And we most certainly don't have a manager quietly tweaking the estimates. If teams pad the plan at all, they do it by gut feeling, under-committing the iteration. Rounding down the sprint commitment is the equivalent of rounding up the estimates.

In reality, most teams don't even plan a buffer. They carry over work to the next iteration: a negative domino effect. Two setbacks (or insights), and your long-term plan is bust.

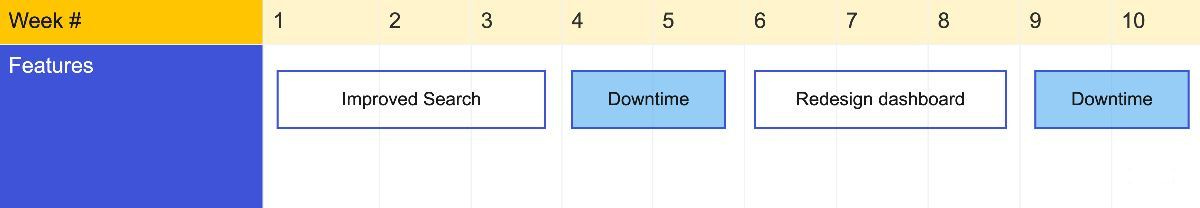

I prefer the alternative: adding a buffer after each product increment.

Each iteration has a focus or goal. For a fixed amount of time, the team works only on this one problem. At the end of the timebox, the solution gets published. A great way of planning buffers is to add a "downtime" timebox after each iteration, e.g., three weeks on, two weeks off.

Downtime is a timebox where nothing gets planned. It's up to the team to use that time as they see fit.
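To make the cadence concrete, here is a minimal sketch that lays out alternating iteration and downtime timeboxes on a calendar. It assumes whole-week timeboxes and uses the "three weeks on, two weeks off" example as defaults; the names `Timebox` and `plan_cadence` are hypothetical and not part of any tool mentioned here.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Timebox:
    kind: str    # "iteration" (planned, focused work) or "downtime" (nothing planned)
    start: date
    end: date    # exclusive: the next timebox starts on this date


def plan_cadence(start: date, cycles: int, on_weeks: int = 3, off_weeks: int = 2) -> list[Timebox]:
    """Hypothetical helper: follow every iteration with a downtime buffer."""
    boxes: list[Timebox] = []
    cursor = start
    for _ in range(cycles):
        for kind, weeks in (("iteration", on_weeks), ("downtime", off_weeks)):
            end = cursor + timedelta(weeks=weeks)
            boxes.append(Timebox(kind, cursor, end))
            cursor = end  # timeboxes are back to back, with no gaps
    return boxes


if __name__ == "__main__":
    for box in plan_cadence(date(2024, 1, 1), cycles=2):
        print(f"{box.kind:<9} {box.start} -> {box.end}")
```

Because the downtime length is just the `off_weeks` argument, trying a shorter or longer buffer is a one-parameter change rather than a re-plan.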

Adding buffer room after each product increment is almost a silver bullet:

- We don't have to drop everything for that customer request. We can keep our focus and still work on it during next week's downtime.
- Low-priority items that never become urgent finally have a natural place in the plan.
- The team has time to experiment and innovate rather than running on a hamster wheel.
- The length of the buffer timebox becomes yet another parameter the team can tweak to find its optimal flow.

But most importantly: the buffer is a fundamental feedback mechanism. We constantly validate whether our plan is feasible or not. We have an early warning system and plenty of room to adapt.

If we spend most of our downtime catching up on the last iteration's problem, we are over-committing. The cool thing is that we can discover our team's limits without impacting the long-term plan. An overrun eats into the downtime, not into the next iteration, so going over our timebox does not trigger a domino effect. We learn and adapt.
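The difference between carrying work over and absorbing it in downtime can be shown with a toy model. This is a minimal sketch, not a method from this post: it assumes overruns are measured as whole days of unplanned extra work per iteration (never negative), a downtime of 10 working days (the "two weeks off"), and reads "most of our downtime" as more than half of it on average. All function names and numbers are hypothetical.

```python
def carry_over_delays(overruns: list[int]) -> list[int]:
    """No buffer: every overrun pushes the next iteration back.
    Returns how many days behind plan we are after each iteration."""
    behind, history = 0, []
    for extra in overruns:
        behind += extra  # slips accumulate: the domino effect
        history.append(behind)
    return history


def simulate_buffer(overruns: list[int], downtime_days: int) -> tuple[list[int], list[float]]:
    """Downtime after each iteration absorbs unfinished work first.
    Returns (days behind plan after each cycle, share of each downtime spent catching up)."""
    behind, delays, shares = 0, [], []
    for extra in overruns:
        pending = behind + extra             # unfinished work when the iteration ends
        used = min(pending, downtime_days)   # downtime spent catching up
        behind = pending - used              # only what exceeds the buffer spills over
        delays.append(behind)
        shares.append(used / downtime_days)
    return delays, shares


def is_over_committing(shares: list[float], threshold: float = 0.5) -> bool:
    """Assumed rule of thumb: on average, catching up eats more than half the downtime."""
    return sum(shares) / len(shares) > threshold


if __name__ == "__main__":
    overruns = [2, 0, 4, 1, 3]  # hypothetical extra days per iteration
    print("carry-over:", carry_over_delays(overruns))  # [2, 2, 6, 7, 10]
    delays, shares = simulate_buffer(overruns, downtime_days=10)
    print("buffered:  ", delays)                        # [0, 0, 0, 0, 0]
    print("over-committing:", is_over_committing(shares))  # False
```

With the same five setbacks, the carry-over plan ends ten days late while the buffered plan stays on schedule. Feeding in larger overruns, such as `[8, 7, 12]`, keeps the long-term plan almost intact but flags over-commitment, which is the early warning the buffer is meant to give.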

In feedback-driven software development, a buffer gives us wiggle room to account for setbacks and insights. It also allows our team to innovate and guard quality. All while keeping our promises.